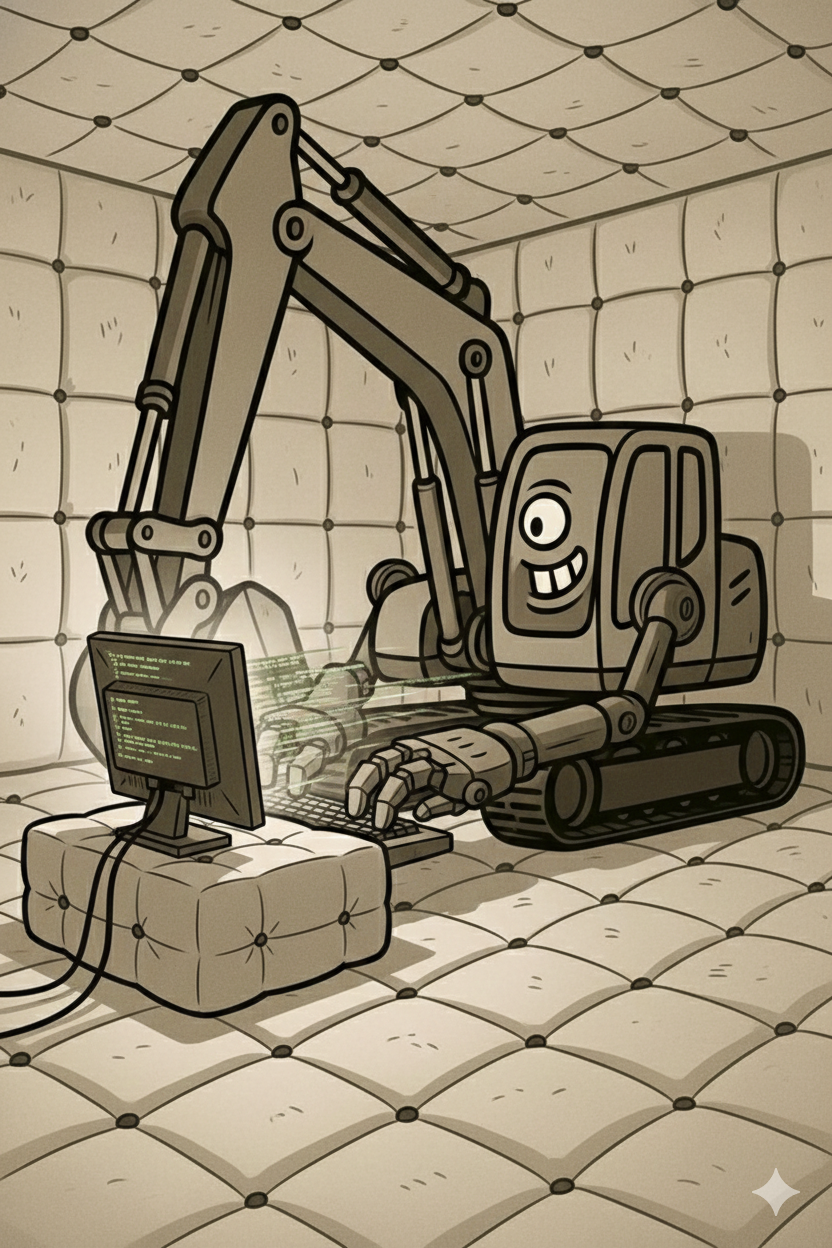

The Padded Room: Engineering a Safe Space for AI Agents

Key Takeaways

- Context is Constraint: We don't just ask AI to "code"; we feed it strict internal standards that define the "physics" of our development world.

- Operationalizing Methodology: Our Task Management standard forces agents to plan before they build, turning the "move fast" impulse into a "measure twice" workflow.

- The Capability Paradox: AI is brilliant at syntax but lacks the context to choose between a thousand ways to solve a problem. We provide the standards that mandate the *one* way we do it.

At Pairti, we view Large Language Models (LLMs) as highly capable technical engines with a specific limitation: they lack inherent architectural wisdom. They can draw on a vast catalog of documented algorithms and design patterns, yet without clear boundaries they can inadvertently introduce complexity. They know a thousand ways to solve a problem, but they need precise standards to apply the *right* one for your specific architecture. Without guidance, you may find several competing approaches eagerly implemented in the same solution, when one approach is usually enough and usually preferable.

The solution is to put that hyperactive genius in a padded room.

Context is the New Physics

In the physical world, engineers don't have to remind a construction crew that gravity exists. It's a fundamental constraint. In the digital world of Generative AI, there is no gravity. The AI can hallucinate a library that doesn't exist, invent a new pattern that breaks your architecture, or decide that today is a good day to rewrite your entire state management layer.

To counter this, we treat our Internal Standards as executable context. We have codified our engineering culture into a series of core standards that serve as the "laws of physics" for any AI agent working in our repo. By defining these boundaries, we prevent architectural drift before it starts.

We deliver these rules to the AI through an internally developed Model Context Protocol (MCP) server that exposes our methodology through a well-defined interface. While the AI consumes the standards as structured data, the standards themselves are maintained as human-readable Markdown files, using Zettlr for documentation and Zotero for rigorous citation management. This ensures that our "physics" is as accessible to human engineers as it is to the agents.
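The post does not describe the server's internals. As a rough illustration only, the sketch below assumes each standard is a Markdown file with a single `# ` title and `- ` bullet rules, and turns it into the kind of structured record that could be published to agents over MCP. The `Standard` class, `parse_standard`, `as_context`, and the `standards://` URI scheme are all hypothetical, and the actual MCP SDK wiring is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class Standard:
    """A parsed internal standard (hypothetical structure, not the real format)."""
    name: str
    title: str
    rules: list[str] = field(default_factory=list)

    @property
    def uri(self) -> str:
        # Hypothetical URI scheme a server could use to address each standard.
        return f"standards://{self.name}"


def parse_standard(name: str, markdown: str) -> Standard:
    """Take the first '# ' heading as the title and every '- ' bullet as a rule."""
    title = None
    rules = []
    for raw in markdown.splitlines():
        line = raw.strip()
        if line.startswith("# ") and title is None:
            title = line[2:].strip()
        elif line.startswith("- "):
            rules.append(line[2:].strip())
    # Fall back to the file name when the document has no top-level heading.
    return Standard(name=name, title=title or name, rules=rules)


def as_context(standards: list[Standard]) -> list[dict]:
    """Render standards as plain structured data an agent-facing interface could serve."""
    return [{"uri": s.uri, "title": s.title, "rules": s.rules} for s in standards]


task_md = """# Task Management Standard
- Search for existing tasks before creating a new one.
- Record the plan before executing it.
"""
context = as_context([parse_standard("task-management", task_md)])
print(context[0]["uri"], len(context[0]["rules"]))  # prints: standards://task-management 2
```

The design point is that the same Markdown file a human edits is the single source of truth; the structured view is derived from it rather than maintained separately.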

The Three-Phase Workflow

Our method keeps agents aligned through a structured, three-phase progression:

- Phase 1: Discovery & Methodology – We clarify the "why" and "how" before a single line is drafted, ensuring the problem space is fully mapped.

- Phase 2: Architectural Design – We enforce strict patterns (such as Riverpod 3.0 for state management) that the AI must operate within, removing the temptation to "improvise" infrastructure.

- Phase 3: Review & Verification – We apply automated checklists to define exactly when we are done, ensuring every commit meets our rigorous quality gates.

This isn't a suggestion; it's a directive. When an AI agent initializes, it is commanded to read the agent instructions and validate every action against our code review checklist. Compliance is the baseline.
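Taken together, the three phases and the checklist directive amount to a gate that an agent cannot skip. As an illustration only, the sketch below models that gate in Python. The phase names follow the workflow described here, but the `PhaseGate` class and every checklist item in `EXIT_CRITERIA` are hypothetical placeholders, since the real checklists are internal.

```python
from enum import Enum


class Phase(Enum):
    DISCOVERY = 1
    DESIGN = 2
    REVIEW = 3


# Hypothetical exit criteria; the team's real checklists are not reproduced here.
EXIT_CRITERIA = {
    Phase.DISCOVERY: ["problem statement agreed", "methodology chosen"],
    Phase.DESIGN: ["uses approved state-management pattern", "no new infrastructure"],
    Phase.REVIEW: ["code review checklist passed", "tests green"],
}


class PhaseGate:
    """Enforces strictly ordered phases: a phase closes only when its checklist is complete."""

    def __init__(self) -> None:
        self.phase = Phase.DISCOVERY
        self.done: set[str] = set()
        self.finished = False

    def check(self, item: str) -> None:
        # Only items belonging to the current phase can be ticked off.
        if item not in EXIT_CRITERIA[self.phase]:
            raise ValueError(f"{item!r} is not an exit criterion of {self.phase.name}")
        self.done.add(item)

    def missing(self) -> list[str]:
        return [i for i in EXIT_CRITERIA[self.phase] if i not in self.done]

    def advance(self) -> None:
        if self.finished:
            raise RuntimeError("workflow already complete")
        gaps = self.missing()
        if gaps:
            raise RuntimeError(f"cannot leave {self.phase.name}; missing: {gaps}")
        if self.phase is Phase.REVIEW:
            self.finished = True
        else:
            self.phase = Phase(self.phase.value + 1)
            self.done = set()


gate = PhaseGate()
try:
    gate.advance()  # refused: nothing in DISCOVERY has been checked yet
except RuntimeError as err:
    print(err)
gate.check("problem statement agreed")
gate.check("methodology chosen")
gate.advance()
print(gate.phase.name)  # prints: DESIGN
```

Raising an error, rather than logging a warning, mirrors the "directive, not suggestion" stance: an out-of-order step simply cannot complete.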

The Thinking Gap: Operationalizing Discipline

One of the biggest risks with AI coding is the "spaghetti speed run." An AI can generate bad code faster than you can read it. To throttle this, we use our Task Management standard to create what we call the "Thinking Gap."

This standard forces the AI to slow down and articulate its intent. It must:

- Search for existing tasks to prevent duplication.

- Create a formal commitment in our backlog system.

- Plan its execution steps explicitly for human oversight.

- Execute the work within defined constraints.

- Verify against a rigid Definition of Done.
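The five steps above behave like a small state machine. The sketch below assumes a hypothetical in-memory `Backlog` standing in for the real project-management tool, and shows the ordering the standard enforces: search before create, an explicit plan, a human approval checkpoint before execution, and verification against a Definition of Done. Duplicate detection here is a simple case- and whitespace-insensitive title match, an assumption chosen for brevity; a real tracker would rely on its own search.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Task:
    title: str
    plan: list[str] = field(default_factory=list)
    approved: bool = False
    status: str = "open"  # open -> planned -> in_progress -> done


def _norm(title: str) -> str:
    # Case- and whitespace-insensitive key: a deliberately simple duplicate check.
    return " ".join(title.lower().split())


class Backlog:
    """Hypothetical in-memory backlog enforcing search, create, plan, execute, verify."""

    def __init__(self) -> None:
        self.tasks: dict[str, Task] = {}

    def search(self, title: str) -> Optional[Task]:
        return self.tasks.get(_norm(title))

    def create(self, title: str) -> Task:
        if self.search(title) is not None:
            raise ValueError(f"duplicate of existing task: {title!r}")
        task = Task(title)
        self.tasks[_norm(title)] = task
        return task

    def plan(self, task: Task, steps: list[str]) -> None:
        if not steps:
            raise ValueError("a plan needs at least one explicit step")
        task.plan = list(steps)
        task.approved = False  # any re-plan needs fresh human sign-off
        task.status = "planned"

    def approve(self, task: Task) -> None:
        # The human "foreman" checkpoint: nothing executes before this call.
        if task.status != "planned":
            raise RuntimeError("only a planned task can be approved")
        task.approved = True

    def execute(self, task: Task) -> None:
        if not task.approved:
            raise RuntimeError("plan has not been approved by a human")
        task.status = "in_progress"

    def verify(self, task: Task, definition_of_done: dict[str, bool]) -> None:
        if task.status != "in_progress":
            raise RuntimeError("task was never executed")
        unmet = [item for item, ok in definition_of_done.items() if not ok]
        if unmet:
            raise RuntimeError(f"Definition of Done not met: {unmet}")
        task.status = "done"


backlog = Backlog()
title = "Add offline cache"
task = backlog.search(title) or backlog.create(title)
backlog.plan(task, ["write failing test", "implement cache", "update docs"])
backlog.approve(task)
backlog.execute(task)
backlog.verify(task, {"tests pass": True, "review checklist passed": True})
print(task.status)  # prints: done
```

Resetting `approved` whenever the plan changes is a small but important choice: an agent cannot get one plan approved and then quietly execute a different one.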

By requiring the AI to work through our project management tools over MCP, we turn a highly capable engine into a disciplined contributor. The AI has to explain what it intends to do before it does it, giving the human "foreman" a critical moment to intervene.

The Result: Innovation with Guardrails

This approach, leveraging the AI's compliance for speed while maintaining absolute architectural control, lets us harness its raw power without the chaos. We get the velocity of automation with the clarity of disciplined intent.

We don't trust the AI to be wise. We trust it to be compliant. And by engineering the environment it lives in, we ensure that its compliance leads to excellence.